Manage Batch Jobs with AWS Batch

AWS Batch allows us to run batch workloads without managing compute resources ourselves. Although other services such as ECS might be more appealing, we are going to take a deeper look at what AWS Batch provides, and along the way we will deploy a sample Batch application using AWS CDK. In case you haven’t heard of CDK, we recommend checking out our series of tutorials on it.

AWS Batch dynamically provisions the optimal quantity and type of computing resources (e.g., CPU or memory optimized instances) based on the volume and specific resource requirements of the batch jobs submitted. Spot instances can also be leveraged to save some money. After your job is finished, Batch can terminate the instances according to your needs.

Let’s deploy a simple Batch application to explore the service a little further.

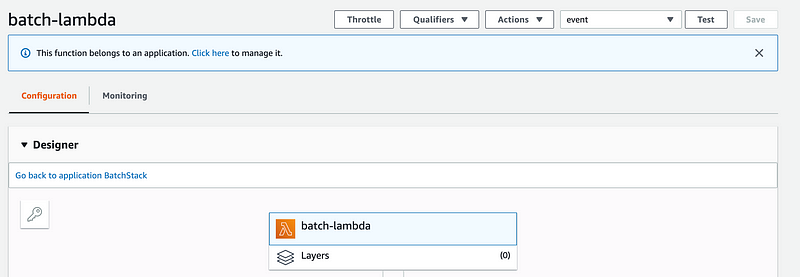

We will create a VPC, the necessary IAM roles, a Security Group, a Job Definition, a Job Queue, a Compute Environment, and a Lambda function that submits a job every 4 hours via CloudWatch Events.
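The four-hour schedule is expressed to CloudWatch Events as a schedule expression. The following is a minimal sketch of how such an expression is formed, not the project's actual code. The helper name `rateExpression` is hypothetical, and it only builds the string that a rule would use.

```typescript
// Units accepted by CloudWatch Events rate expressions.
type RateUnit = "minute" | "hour" | "day";

// Hypothetical helper: builds a rate expression such as "rate(4 hours)".
// The unit is singular when the value is 1 and plural otherwise.
function rateExpression(value: number, unit: RateUnit): string {
  if (!Number.isInteger(value) || value < 1) {
    throw new Error("rate value must be a positive integer");
  }
  const suffix = value === 1 ? "" : "s";
  return `rate(${value} ${unit}${suffix})`;
}

// Schedule described in this tutorial: one job submission every 4 hours.
const everyFourHours = rateExpression(4, "hour");
console.log(everyFourHours); // rate(4 hours)
```

A rate expression counts from the moment the rule is created. If the submissions should instead line up with the clock, a cron expression such as `cron(0 0/4 * * ? *)` is the usual alternative.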

Job Queue: Jobs are submitted to a job queue, where they reside until they can be scheduled to run in a compute environment.

Job Definition: Specifies how jobs are going to run. While each job must reference a job definition, many of the parameters specified in the job definition can be overridden at runtime.

Compute Environments: Job queues are mapped to one or more compute environments. There are two types: Managed and Unmanaged Compute Environments.

Managed Compute Environments: Batch manages the capacity and instance types of the compute resources within the environment, based on the compute resource specification that you define when you create the compute environment.

Unmanaged Compute Environments: In an unmanaged compute environment, you manage your own compute resources. You must ensure that the AMI you use for your compute resources meets the AMI specification.
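To illustrate how a job references a job queue and a job definition while overriding some parameters at runtime, here is a minimal sketch of a SubmitJob request of the kind the scheduler Lambda function could send. The field names follow the AWS Batch SubmitJob API. The queue name, job definition name, command, and the `buildSubmitJobRequest` helper are placeholders assumed for illustration and are not taken from the project.

```typescript
// Subset of the AWS Batch SubmitJob request shape, declared locally
// so that the sketch is self-contained.
interface KeyValuePair {
  name: string;
  value: string;
}

interface ResourceRequirement {
  type: "VCPU" | "MEMORY" | "GPU";
  value: string;
}

interface ContainerOverrides {
  command?: string[];
  environment?: KeyValuePair[];
  resourceRequirements?: ResourceRequirement[];
}

interface SubmitJobRequest {
  jobName: string;
  jobQueue: string;
  jobDefinition: string;
  containerOverrides?: ContainerOverrides;
}

// Hypothetical helper: builds the request for one scheduled run.
function buildSubmitJobRequest(runId: string): SubmitJobRequest {
  return {
    jobName: `sample-job-${runId}`,
    jobQueue: "sample-job-queue", // placeholder queue name
    jobDefinition: "sample-job-definition", // placeholder; no revision given, so the latest active one is used
    containerOverrides: {
      // These values replace the ones in the job definition for this run only.
      command: ["echo", "hello from AWS Batch"],
      environment: [{ name: "RUN_ID", value: runId }],
      resourceRequirements: [
        { type: "VCPU", value: "1" },
        { type: "MEMORY", value: "512" }, // MiB
      ],
    },
  };
}

const request = buildSubmitJobRequest("run-001");
console.log(JSON.stringify(request, null, 2));
```

With the AWS SDK for JavaScript v3, an object of this shape could be passed to `SubmitJobCommand` from `@aws-sdk/client-batch`.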

To deploy:

# Install dependencies

npm install

# Edit .env for environment variables

vim .env

# Deploy

cdk deploy

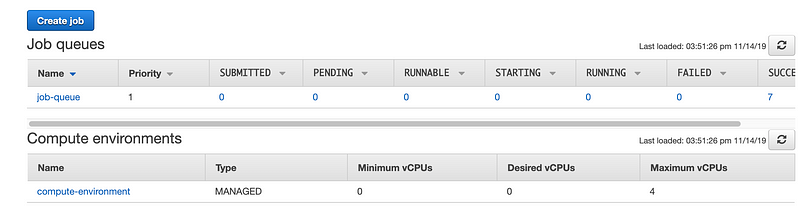

After we’ve deployed the stack, we can head over to the Batch console and see the newly created resources.

Since we’ve defined the minimum vCPUs as 0, no compute resources are created while no jobs are running. To see how Batch handles the creation of the necessary compute resources, go to the Lambda console and run the scheduler function manually.
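The scale-to-zero behaviour comes from the compute resource specification of the managed compute environment. As a rough sketch, the relevant settings look like the following. The property names are those of the `ComputeResources` block of the CloudFormation resource `AWS::Batch::ComputeEnvironment`; the concrete values and the `validateComputeResources` helper are assumptions for illustration, and the project's real settings may differ.

```typescript
// Subset of the ComputeResources properties of AWS::Batch::ComputeEnvironment.
interface ComputeResources {
  Type: "EC2" | "SPOT";
  MinvCpus: number;
  MaxvCpus: number;
  InstanceTypes: string[];
}

// Assumed values for illustration only.
const computeResources: ComputeResources = {
  Type: "EC2",
  MinvCpus: 0, // nothing is kept running while the queue is empty
  MaxvCpus: 4, // upper bound that Batch may scale out to
  InstanceTypes: ["optimal"], // let Batch choose a suitable instance type
};

// Hypothetical sanity check for the vCPU bounds.
function validateComputeResources(resources: ComputeResources): string[] {
  const problems: string[] = [];
  if (resources.MinvCpus < 0) {
    problems.push("MinvCpus must not be negative");
  }
  if (resources.MaxvCpus < resources.MinvCpus) {
    problems.push("MaxvCpus must be at least MinvCpus");
  }
  return problems;
}

console.log(validateComputeResources(computeResources)); // []
```

Raising `MinvCpus` above 0 would keep capacity running between jobs, which shortens the wait in the Runnable state at the cost of paying for idle instances.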

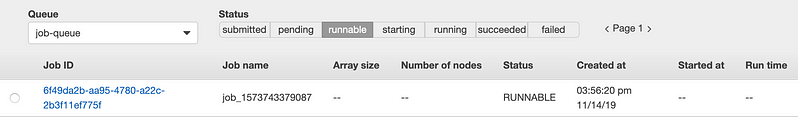

You should see the newly created job in the Jobs section under the Runnable tab. That means the job is ready to be scheduled and is waiting for the necessary compute resources to become available.

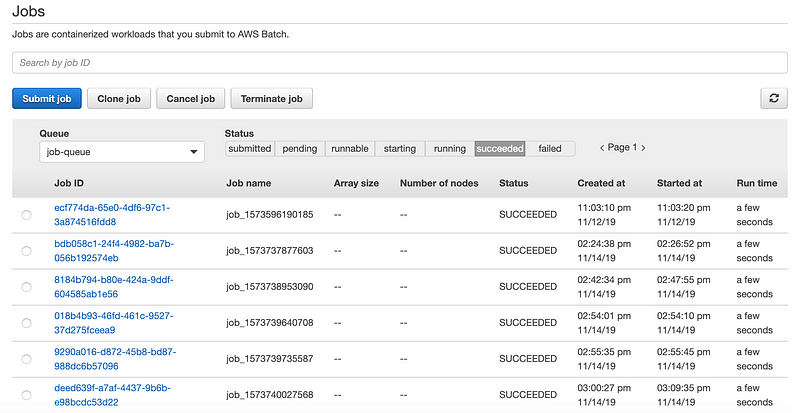

After some time your job will be executed and you can see it under the Succeeded tab.

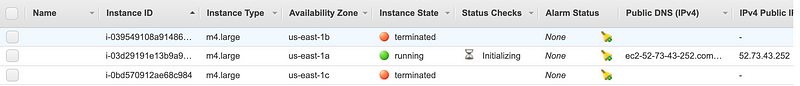
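The tabs in the console correspond to the job states that Batch reports. Instead of watching the console, the same progression could be followed programmatically. The sketch below is only an illustration: `waitForJob` is a hypothetical helper, and `getStatus` stands in for a real lookup such as a DescribeJobs call, replaced here by a fake so that the example runs on its own.

```typescript
// Job states reported by AWS Batch.
type JobStatus =
  | "SUBMITTED"
  | "PENDING"
  | "RUNNABLE"
  | "STARTING"
  | "RUNNING"
  | "SUCCEEDED"
  | "FAILED";

// A job stops changing state once it has succeeded or failed.
function isTerminal(status: JobStatus): boolean {
  return status === "SUCCEEDED" || status === "FAILED";
}

// Hypothetical polling helper: asks for the status until it is terminal
// or the attempt limit is reached.
async function waitForJob(
  getStatus: () => Promise<JobStatus>,
  intervalMs: number,
  maxAttempts: number,
): Promise<JobStatus> {
  for (let attempt = 0; attempt < maxAttempts; attempt++) {
    const status = await getStatus();
    if (isTerminal(status)) {
      return status;
    }
    await new Promise<void>((resolve) => setTimeout(resolve, intervalMs));
  }
  throw new Error("job did not reach a terminal state in time");
}

// Fake status source that mimics the walkthrough: Runnable, then Running, then Succeeded.
let polls = 0;
const fakeGetStatus = async (): Promise<JobStatus> => {
  polls += 1;
  if (polls === 1) return "RUNNABLE";
  if (polls === 2) return "RUNNING";
  return "SUCCEEDED";
};

waitForJob(fakeGetStatus, 10, 10).then((finalStatus) => console.log(finalStatus)); // SUCCEEDED
```

In real use the polling interval would be much longer than 10 ms, since a job can stay in the Runnable state for minutes while instances are being launched.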

Now head over to the EC2 console and check the current instances. You will see that one instance is running. When Batch finishes the job, it will terminate the instance. When a new job is submitted, a new instance will be created for it.

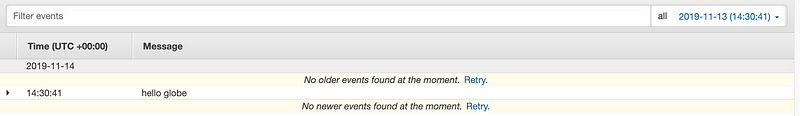

You can also check the logs of the Batch job in the CloudWatch console.

That’s it, we’ve reached the end of this short tutorial. You can create different workloads and see which resources Batch will create for you. Batch handles job execution and compute resource management, allowing us to focus more on developing business-critical applications rather than setting up and managing complex resource combinations. AWS does not charge extra for Batch itself; you only pay for the resources that you use.

When you are done with the stack, don’t forget to delete it via the CDK CLI.

# Destroy the stack

cdk destroy

The completed project can be found here.