AWS Backup for a Centralized Backup Solution

AWS Backup is a fully managed service that centralizes and automates data backup across AWS services in the cloud as well as in on-premises environments. It lets you configure backup policies and monitor backup activity for all your AWS resources from a single interface. By automating and consolidating backup tasks that were previously performed service by service, it removes the need for custom scripts and manual processes.

Overview of AWS Backup

AWS Backup allows you to create backup policies that automate the backup of your data across AWS services such as Amazon EC2, Amazon EBS, Amazon RDS, Amazon DynamoDB, Amazon S3, Amazon EFS, and AWS Storage Gateway. You can also use AWS Backup to back up data in on-premises environments and other cloud environments. AWS Backup makes it easy to create, manage, and monitor backups, as well as to restore data when needed.

Key features of AWS Backup include:

Automated backup policies: AWS Backup allows you to create backup policies that automate the backup of your data according to your desired schedule and retention policies.

Cross-region and cross-account backup: You can use AWS Backup to create backups of your data across multiple AWS regions and accounts.

Centralized backup management: AWS Backup provides a single dashboard where you can create, manage, and monitor backups across multiple AWS services and accounts.

Backup monitoring and reporting: AWS Backup provides metrics and alerts to help you monitor the health and performance of your backups, as well as reporting on backup compliance and costs.

Flexible restore options: AWS Backup allows you to restore your data to its original location or to a different location, and you can restore individual files or entire volumes or databases.

Legal Hold: A legal hold is an administrative tool that helps prevent backups from being deleted while under a hold. While the hold is in place, backups under a hold cannot be deleted and lifecycle policies that would alter the backup status (such as transition to a Deleted state) are delayed until the legal hold is removed. A backup can have more than one legal hold.
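
To make the legal hold behavior concrete, here is a minimal sketch in plain TypeScript. It is only a model of the rules described above, not the AWS Backup API: the `RecoveryPoint` shape, the `legalHoldIds` field, and all function names are hypothetical and chosen for illustration.

```typescript
// Hypothetical model of a backup (recovery point) and the legal holds on it.
// Field names are illustrative and do not mirror the AWS Backup API.
interface RecoveryPoint {
  id: string;
  // When the lifecycle policy would normally expire this backup.
  expiresAt: Date;
  // IDs of the legal holds currently covering this backup (can be several).
  legalHoldIds: string[];
}

type LifecycleDecision = "retain" | "expire" | "delayed-by-legal-hold";

// Decide what the lifecycle should do with a recovery point at time `now`.
function decideLifecycle(point: RecoveryPoint, now: Date): LifecycleDecision {
  const due = now.getTime() >= point.expiresAt.getTime();
  if (!due) return "retain";
  // Expiry is due, but any remaining hold postpones it.
  return point.legalHoldIds.length > 0 ? "delayed-by-legal-hold" : "expire";
}

// Manual deletion is refused while at least one hold remains.
function canDelete(point: RecoveryPoint): boolean {
  return point.legalHoldIds.length === 0;
}

// Releasing one hold leaves every other hold in force.
function releaseHold(point: RecoveryPoint, holdId: string): RecoveryPoint {
  return {
    id: point.id,
    expiresAt: point.expiresAt,
    legalHoldIds: point.legalHoldIds.filter((h) => h !== holdId),
  };
}

// Usage: a backup past its expiry date that is covered by two holds.
const heldPoint: RecoveryPoint = {
  id: "rp-1",
  expiresAt: new Date("2024-01-01T00:00:00Z"),
  legalHoldIds: ["hold-a", "hold-b"],
};
const afterExpiry = new Date("2024-02-01T00:00:00Z");
const decision = decideLifecycle(heldPoint, afterExpiry); // "delayed-by-legal-hold"
```

Only after both holds are released does `canDelete` return true and the delayed expiry go through.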

In this tutorial, I will provide an overview of AWS Backup and a step-by-step guide to creating and managing backups with it. I will use the AWS Cloud Development Kit (CDK) to provision the necessary cloud resources.

If you don’t have the CDK installed, please refer to the AWS CDK documentation.

    import * as cdk from "aws-cdk-lib";
    import * as rds from "aws-cdk-lib/aws-rds";
    import * as backup from "aws-cdk-lib/aws-backup";
    import * as dynamodb from "aws-cdk-lib/aws-dynamodb";
    import * as ec2 from "aws-cdk-lib/aws-ec2";
    import { Backup } from "cdk-backup-plan";
    import { config } from "dotenv";
    import { Construct } from "constructs";

    // Load AWS_ACCOUNT_ID and AWS_REGION from a local .env file
    config();

    class AWSBackupStack extends cdk.Stack {
      constructor(scope: Construct, id: string, props?: cdk.StackProps) {
        super(scope, id, props);

        // DynamoDB table to back up
        const dynamoTable = new dynamodb.Table(this, "dynamo-table", {
          tableName: "dynamo-table",
          partitionKey: { name: "id", type: dynamodb.AttributeType.STRING },
          billingMode: dynamodb.BillingMode.PAY_PER_REQUEST,
          removalPolicy: cdk.RemovalPolicy.DESTROY,
        });

        // Reuse the account's default VPC (the lookup needs an explicit env)
        const vpc = ec2.Vpc.fromLookup(this, "vpc", {
          isDefault: true,
        });

        // We define the instance details here
        const ec2Instance = new ec2.Instance(this, "Instance", {
          vpc,
          instanceType: ec2.InstanceType.of(ec2.InstanceClass.T3, ec2.InstanceSize.NANO),
          machineImage: new ec2.AmazonLinuxImage({ generation: ec2.AmazonLinuxGeneration.AMAZON_LINUX_2 }),
        });

        // Serverless Aurora PostgreSQL cluster to back up
        const auroraCluster = new rds.ServerlessCluster(this, "rds-cluster", {
          engine: rds.DatabaseClusterEngine.AURORA_POSTGRESQL,
          enableDataApi: true,
          parameterGroup: rds.ParameterGroup.fromParameterGroupName(this, "ParameterGroup", "default.aurora-postgresql10"),
        });

        // One backup plan per resource, each with a 2-hour rate and a 2-hour completion window
        const dynamoBackup = new Backup(this, "dynamo-backup", {
          backupPlanName: "dynamo-backup",
          backupRateHour: 2,
          backupCompletionWindow: cdk.Duration.hours(2),
          resources: [backup.BackupResource.fromDynamoDbTable(dynamoTable)],
        });

        const auroraBackup = new Backup(this, "aurora-backup", {
          backupPlanName: "aurora-backup",
          backupRateHour: 2,
          backupCompletionWindow: cdk.Duration.hours(2),
          resources: [backup.BackupResource.fromRdsServerlessCluster(auroraCluster)],
        });

        const ec2Backup = new Backup(this, "ec2-backup", {
          backupPlanName: "ec2-backup",
          backupRateHour: 2,
          backupCompletionWindow: cdk.Duration.hours(2),
          resources: [backup.BackupResource.fromEc2Instance(ec2Instance)],
        });

        // Expose the backup plan IDs as stack outputs
        new cdk.CfnOutput(this, "dynamo-backup-id", {
          value: dynamoBackup.backupPlan.backupPlanId,
        });

        new cdk.CfnOutput(this, "aurora-backup-id", {
          value: auroraBackup.backupPlan.backupPlanId,
        });

        new cdk.CfnOutput(this, "ec2-backup-id", {
          value: ec2Backup.backupPlan.backupPlanId,
        });
      }
    }

    const app = new cdk.App();

    new AWSBackupStack(app, "AWSBackupStack", {
      env: {
        account: process.env.AWS_ACCOUNT_ID,
        region: process.env.AWS_REGION,
      },
    });

Let’s go over the code.

- We create a DynamoDB table, an EC2 instance, and a serverless Aurora cluster.

- We use the cdk-backup-plan construct from the aws-samples GitHub account, which is a reusable backup CDK construct.

- We output the backupPlanId of each backup plan created by the cdk-backup-plan construct.
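
Each plan above uses `backupRateHour: 2`. How the cdk-backup-plan construct turns that value into a schedule is not shown in this post, so the mapping below is an assumption: a minimal sketch of how an "every N hours" rate can be written in AWS's six-field cron syntax. The helper names `everyNHoursCron` and `firingHours` are hypothetical.

```typescript
// Build an AWS-style cron expression that fires at minute 0 every `rateHours` hours.
// AWS cron fields, in order: minutes hours day-of-month month day-of-week year.
// Assumption: this is one plausible mapping, not necessarily what the construct emits.
function everyNHoursCron(rateHours: number): string {
  if (!Number.isInteger(rateHours) || rateHours < 1 || rateHours > 23) {
    throw new RangeError("rateHours must be an integer between 1 and 23");
  }
  // "0/N" in the hours field means: start at hour 0, then every N hours.
  return `cron(0 0/${rateHours} * * ? *)`;
}

// UTC hours of the day on which the expression above fires.
// Note: if rateHours does not divide 24, the last gap of the day is shorter,
// because the pattern restarts at hour 0.
function firingHours(rateHours: number): number[] {
  const hours: number[] = [];
  for (let h = 0; h < 24; h += rateHours) {
    hours.push(h);
  }
  return hours;
}

const twoHourly = everyNHoursCron(2); // "cron(0 0/2 * * ? *)"
const twoHourlyRuns = firingHours(2); // 12 runs per day: 0, 2, 4, ..., 22
```

With a 2-hour rate and a 2-hour completion window, each backup job has until the next scheduled start to finish.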

    # Bootstrap the CDK environment if it doesn't exist yet
    $ cdk bootstrap

    # Deploy
    $ cdk deploy

    ✅ AWSBackupStack

    ✨ Deployment time: 236.18s

    Outputs:
    AWSBackupStack.aurorabackupBackupPlanArn4DB2EC59 = arn:aws:backup:us-west-1:xxxxxx:backup-plan:9b3fd8e4-81c0-4469-93d6-97729cbd2ca3
    AWSBackupStack.aurorabackupBackupPlanIdE271B702 = 9b3fd8e4-81c0-4469-93d6-97729cbd2ca3
    AWSBackupStack.aurorabackupid = 9b3fd8e4-81c0-4469-93d6-97729cbd2ca3
    AWSBackupStack.dynamobackupBackupPlanArn74D54B83 = arn:aws:backup:us-west-1:xxxxxx:backup-plan:606648ee-b6a1-47ad-bfc5-e6e0256b51ae
    AWSBackupStack.dynamobackupBackupPlanIdCE0A7320 = 606648ee-b6a1-47ad-bfc5-e6e0256b51ae
    AWSBackupStack.dynamobackupid = 606648ee-b6a1-47ad-bfc5-e6e0256b51ae
    AWSBackupStack.ec2backupBackupPlanArn2A537E57 = arn:aws:backup:us-west-1:xxxxxx:backup-plan:0935cb07-de96-4266-84b0-0250d8fe53c3
    AWSBackupStack.ec2backupBackupPlanIdA9462D34 = 0935cb07-de96-4266-84b0-0250d8fe53c3
    AWSBackupStack.ec2backupid = 0935cb07-de96-4266-84b0-0250d8fe53c3

    Stack ARN:
    arn:aws:cloudformation:us-west-1:xxxxxx:stack/AWSBackupStack/4dd69c80-b0ab-11ed-87b4-06602603bd5b
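
If you want to use these outputs in a script, the plan ARN can be split into its parts. The following is a minimal sketch that assumes the standard backup plan ARN layout `arn:<partition>:backup:<region>:<account>:backup-plan:<plan-id>`; the `parseBackupPlanArn` helper and the `BackupPlanArn` type are hypothetical names.

```typescript
// Parts of a backup plan ARN (hypothetical type, for illustration only).
interface BackupPlanArn {
  partition: string;
  region: string;
  accountId: string;
  planId: string;
}

// Split a backup plan ARN into its components, rejecting anything that does
// not match arn:<partition>:backup:<region>:<account>:backup-plan:<plan-id>.
function parseBackupPlanArn(arn: string): BackupPlanArn {
  const parts = arn.split(":");
  const [prefix, partition, service, region, accountId, resourceType, planId] = parts;
  if (
    parts.length !== 7 ||
    prefix !== "arn" ||
    service !== "backup" ||
    resourceType !== "backup-plan" ||
    !partition ||
    !region ||
    !accountId ||
    !planId
  ) {
    throw new Error(`Not a backup plan ARN: ${arn}`);
  }
  return { partition, region, accountId, planId };
}

// Usage with the (redacted) Aurora plan ARN from the deployment output.
const auroraPlan = parseBackupPlanArn(
  "arn:aws:backup:us-west-1:xxxxxx:backup-plan:9b3fd8e4-81c0-4469-93d6-97729cbd2ca3"
);
// auroraPlan.region is "us-west-1"
// auroraPlan.planId is "9b3fd8e4-81c0-4469-93d6-97729cbd2ca3"
```

The extracted plan ID matches the separate `backupPlanId` output, so either value identifies the plan.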

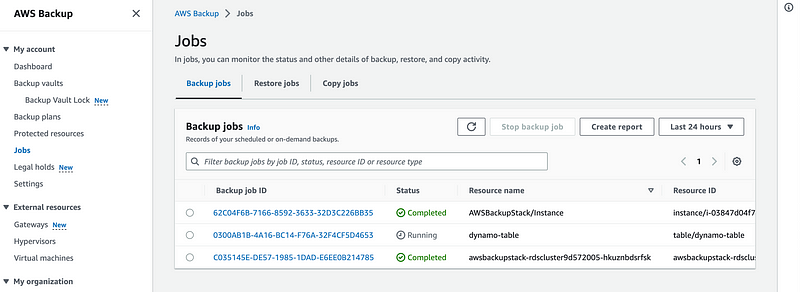

Let’s check the AWS Backup console to see the backup plans.

Great, now we can manage multiple backups in one place, with no need to handle backups for each service individually. You can use AWS Backup to organize all of your backups.

Once you are done with this tutorial, don’t forget to remove the cloud resources. You should also go to the AWS Backup console and remove the vaults, as the cdk destroy command will not delete them.
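
The manual vault cleanup could also be scripted. A vault can only be deleted once it is empty, so the recovery points have to go first. The sketch below shows that ordering against a hypothetical `VaultClient` interface; in a real script each method would wrap the corresponding AWS SDK call, which is left out here so the example runs without AWS access. `FakeVaultClient` is an in-memory stand-in, not part of any library.

```typescript
// Hypothetical minimal client. In practice each method would wrap an AWS SDK
// call (list recovery points in a vault, delete a recovery point, delete a vault).
interface VaultClient {
  listRecoveryPointArns(vaultName: string): Promise<string[]>;
  deleteRecoveryPoint(vaultName: string, recoveryPointArn: string): Promise<void>;
  deleteVault(vaultName: string): Promise<void>;
}

// Empty a vault, then delete it. Returns the number of recovery points removed.
async function emptyAndDeleteVault(client: VaultClient, vaultName: string): Promise<number> {
  const arns = await client.listRecoveryPointArns(vaultName);
  for (const arn of arns) {
    await client.deleteRecoveryPoint(vaultName, arn);
  }
  // Only attempted after every recovery point has been removed.
  await client.deleteVault(vaultName);
  return arns.length;
}

// In-memory stand-in used to exercise the function without touching AWS.
class FakeVaultClient implements VaultClient {
  private vaults: Map<string, string[]>;

  constructor(vaults: Map<string, string[]>) {
    this.vaults = vaults;
  }

  async listRecoveryPointArns(vaultName: string): Promise<string[]> {
    return (this.vaults.get(vaultName) || []).slice();
  }

  async deleteRecoveryPoint(vaultName: string, recoveryPointArn: string): Promise<void> {
    const points = this.vaults.get(vaultName) || [];
    this.vaults.set(vaultName, points.filter((p) => p !== recoveryPointArn));
  }

  async deleteVault(vaultName: string): Promise<void> {
    // Mirrors the rule that a non-empty vault cannot be deleted.
    if ((this.vaults.get(vaultName) || []).length > 0) {
      throw new Error("vault is not empty");
    }
    this.vaults.delete(vaultName);
  }

  hasVault(vaultName: string): boolean {
    return this.vaults.has(vaultName);
  }
}
```

Run a script like this only after the stack itself has been destroyed, and keep in mind that a recovery point under a legal hold cannot be deleted until the hold is released.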

    cdk destroy

In this tutorial, we explored the AWS Backup service. AWS Backup is a good fit if you want a centralized, end-to-end backup solution that supports business and regulatory compliance requirements.
